1.) The great challenge for every nation, every army, every business, and every household is staffing. There are never enough hands to go around. Over-population is a 20th-century meme that arose from the conservation movement. Historically, the real issue has been under-population, and it may now re-enter the spotlight.

- Nations with small and shrinking populations lose power. In extremis, they cease to be nations.

- US Army commercials advertising prospective soldiers as an “Army of One”? A willful perpetuation of Hollywood illusions. A real army is about logistics, teamwork, and travel. The true test of a general is not standing fast with 300 against overwhelming odds. Instead, it is attracting and retaining millions of followers, then feeding, training, and transporting them.

- A household of one is doomed in the long run and limited in the short run. While there are not 5 kids to feed and diaper, there are also not 5 future adults to run the family business.

2.) The Internet began as a DARPA (Defense Advanced Research Projects Agency; military) way of distributing computing resources and removing centralized physical bottlenecks that were vulnerable to massive physical attack. Once the concept was released to the general public, it became a means of sharing information more easily. Instead of learning about planting wheat from Farmer Bob, the local expert, family and friends can now learn from a global community of expert farmers who have documented and published their wheat-farming techniques. The Internet extends the range that information can travel.

Combined with advances in sensor technology, namely the cameras, microphones, gyroscopes, and motors most obviously packaged in our ubiquitous smartphones, the Internet makes it an obvious next step to spread sensors over longer distances. More sensors, spread over longer distances and attached to more objects, form the Internet of Things.

Taken together, the Internet of Things promises to make us all-seeing, all-knowing beings. With access to all the world’s libraries and books, anyone can search for anything from the most obscure line of Shakespeare’s sonnets to how to construct an origami rose. With access to personal cameras, microphones, and motor actuators, anyone can see and interact with environments halfway around the world through tele-presence. One person, whether a wheat farmer or a security guard, can monitor hundreds of miles of land. By embedding sensors in transportable objects, analysts can track who buys which products for what purpose, and physicians can track patient movement and diet. With sufficient sensors and actuators, cars can even drive themselves safely from point A to point B (McKinsey & Company, 2010).

On the surface, the Internet of Things appears to solve the staffing problem. Each person can be connected and magnified such that 10 people can do the work of 1,000. With enough data flowing through the sensors and onward to a processing center, it no longer matters that a business or household is understaffed. Automation and connected sensors reduce the necessary staffing. The possibilities extend to a Kurzweilian Singularity, in which technology augments and extends people’s bodies until it saturates the universe.

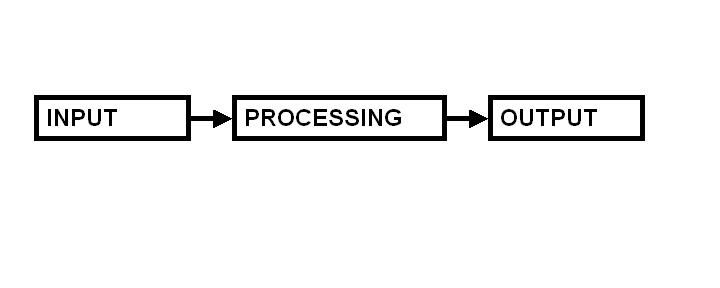

As doctoral students in brain and business intelligence, we must not get swept up in blind fervor; instead, we must truly open our senses and examine this concept further for challenges in implementation. The basic premise relies on the Input-Processing-Output flow.

This basic flow shows up in virtually all disciplines, including:

- Computer Science (Data -> Algorithm -> Output)

- Medicine (Drug -> Metabolism -> Outcome)

- Manufacturing (Raw Materials -> Factory Value Added -> Product)

- Accounting and Finance (Inventory -> Marketing and Sales -> Profit).

Translated into the Internet of Things, the flow becomes:

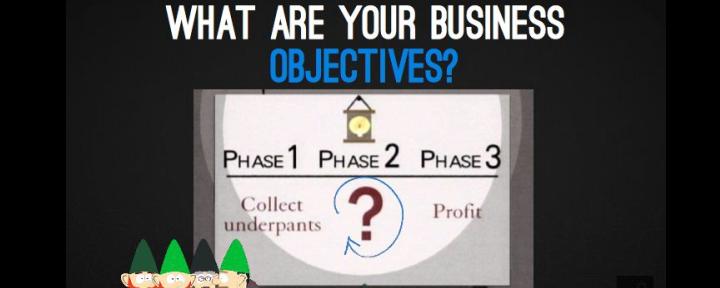

- Sensors on Things -> ??? -> Singularity

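This will remind the pop-culture viewer of the Underpants Gnomes’ business plan from a certain South Park episode: Phase 1, collect underpants; Phase 2, ???; Phase 3, profit.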

What is the second phase? What happens in accounting when a firm announces it has acquired a vast amount of inventory? What happens to a manufacturing firm that has acquired vast stockpiles of raw materials? What happens to a patient who has ingested vast quantities of a drug? In each case, the firm is unequivocally in deep trouble (inventory is expensive to hold), and the patient, in the best-case scenario, needs the Emergency Room. Computer Science and Technology, especially the subfield of machine learning, may be the only area in which a glut of data is viewed as beneficial. Unfortunately, closer examination shows even this perspective to be flawed. The keyword here is Sensor Fusion.

The Internet of Things applied to Sensor Fusion manifests in two prominent platforms: drones (AKA Remotely Piloted Vehicles) and fighter jets. A drone is a flying camera, a perfect example of a “thing with a sensor attached.” A modern fighter jet’s expense is mystifying until an examination of line-item costs shows that an increasing portion goes toward its sensors. In both cases, simply putting more sensors on a jet or more drones in the air does not produce improved situational awareness or smarter decisions.

My old department at my alma mater was heavily funded by DARPA, through public, non-classified projects. One of my colleagues helped design cockpit warning klaxons whose pitches grab the pilot’s attention to differing degrees. For example, a low-oil warning should get the pilot’s attention, but not nearly as forcefully as a hostile radar lock. The implication was clear: with so many gauges competing for the pilot’s attention, less is more.
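The “less is more” principle can be sketched as an alert filter that ranks active warnings by urgency and surfaces only the most urgent few. This is a minimal Python sketch under stated assumptions: the alert names, the numeric levels in the hypothetical `URGENCY` table, and the `max_alerts` cut-off are illustrations, not values from any real cockpit design.

```python
import heapq

# Hypothetical urgency ranking (higher = more urgent); illustrative only,
# not taken from any real cockpit specification.
URGENCY = {
    "hostile radar lock": 3,
    "low fuel": 2,
    "low oil": 1,
}


def alerts_to_present(active_alerts, max_alerts=1):
    """Return at most `max_alerts` active alerts, most urgent first."""
    return heapq.nlargest(max_alerts, active_alerts, key=lambda alert: URGENCY[alert])


active = ["low oil", "hostile radar lock", "low fuel"]
top_one = alerts_to_present(active)                # only the loudest klaxon
top_two = alerts_to_present(active, max_alerts=2)  # slightly more context
```

Raising `max_alerts` trades the pilot’s attention for coverage; the filter exists to protect that attention, which is the scarce resource.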

Another of my colleagues worked in remote sensing. The task: given a set of expert-labeled satellite image grids, with classifications including natural formation, man-made formation, car, road, building, truck, and other vehicle, extend the labeling to the raw, unlabeled image grids. The implication here was also clear. With 50 to 100 drone flights per day, each lasting tens of hours, there is a glut of aerial video imagery. What is needed is more analysts, not more drones.
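The staffing implication can be made concrete with back-of-the-envelope arithmetic. The sketch assumes 10 to 20 hours per flight (a conservative reading of “tens of hours”) and a hypothetical 8-hour analyst shift in which one analyst watches one video stream in real time; both figures are assumptions for illustration, not data from the project.

```python
import math

SHIFT_HOURS = 8  # hypothetical analyst shift length (assumption)


def analyst_shifts_per_day(flights_per_day, hours_per_flight, shift_hours=SHIFT_HOURS):
    """Analyst shifts needed to watch every hour of footage once, in real time."""
    video_hours = flights_per_day * hours_per_flight
    return math.ceil(video_hours / shift_hours)


low = analyst_shifts_per_day(50, 10)    # 500 hours of video per day
high = analyst_shifts_per_day(100, 20)  # 2,000 hours of video per day
```

Even the low end requires dozens of analyst shifts every day just to view the footage once, before any labeling or interpretation takes place.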

Sensor Fusion is a decades-old concept. Succinctly, it attempts to merge the outputs of disparate sensors such that the whole picture is clearer and more accurate than the sum of its parts. Let us run with the modern fighter jet example, especially since it dovetails so nicely with popular culture.

The F-14 Tomcat is a large, swing-wing, two-seat US Navy fighter. In the movie Top Gun (starring Tom Cruise and Anthony Edwards), Tom Cruise plays the hotshot pilot. Anthony Edwards plays the rear-seat RIO (Radar Intercept Officer). The plane takes off, does some fancy flying courtesy of Tom Cruise’s character, and saves the day. Anthony Edwards’ character, as the back-seat flyer, seems to be there merely for the ride, the commentary, and as an audience foil, looking wide-eyed out of the back seat. The credits roll, and the popcorn is emptied. The discerning viewer may be moved to ask, “Why exactly is there a back-seater on that fabulous airplane? What exactly does he do there?”

Late World War II airplanes were inexpensive (about $500,000 in today’s money), consisting of engine, frame, guns, and hydraulics. The sensor equipment consisted of smooth cockpit glass, a pair of pilot eyeballs, and a radio. An F-14, in contrast, costs about $50 million in today’s money. Seriously. A single F-14 cost as much as roughly 100 World War II Mustangs. Why? The F-14 has a gigantic radar embedded in its nose, easily accounting for over a quarter of the entire F-14 price tag. The radar acts as a long-range, beyond-visual-range flashlight to illuminate the enemy air force. Eyeballs see about 3-5 miles on a good day. The radar reaches out to about 100 miles. So far, so good. Just put on a scope like in a video game and paint a red dot on the bad guys.
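The cost and range figures above can be checked with simple arithmetic. The sketch uses the essay’s own round numbers; the only added assumption is that the searched region is a circle, so coverage grows with the square of the detection range.

```python
MUSTANG_COST = 500_000     # late-WWII fighter, today's dollars (essay's figure)
F14_COST = 50_000_000      # F-14, today's dollars (essay's figure)
RADAR_SHARE = 0.25         # "over a quarter" of the price, used as a lower bound

EYEBALL_RANGE_MI = (3, 5)  # visual range on a good day
RADAR_RANGE_MI = 100

mustangs_per_f14 = F14_COST / MUSTANG_COST
radar_cost_floor = F14_COST * RADAR_SHARE
mustangs_per_radar = radar_cost_floor / MUSTANG_COST

# Assumed circular search area: coverage scales with range squared.
area_gain = [(RADAR_RANGE_MI / r) ** 2 for r in EYEBALL_RANGE_MI]
```

The radar alone costs at least as much as 25 Mustangs, and although its reach is only 20 to 33 times that of the eyeball, it searches roughly 400 to 1,100 times the area, which is why the number of returns explodes.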

Except that a radar that illuminates out to 100 miles is going to paint every single passenger jet, fighter jet, bomber, tree, building, mountain, rock, bird, and insect in its path. There could easily be over a million targets. So the radar algorithm needs a series of filters. One filter, for example, might take into account the F-14’s own movement. If the jet is flying at 500 knots, then any radar object approaching at exactly 500 knots is stationary and can be filtered out as a ground or natural weather object. But since the radar is so powerful, an opposing fighter can detect that it is being tracked and simply turn 90 degrees, flying perpendicular to the F-14’s line of sight. Et voilà! The opposing fighter has just disappeared from the F-14’s radar, since its closing rate is now exactly 500 knots.
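The closing-rate filter and the perpendicular-turn trick can be sketched in a simplified two-dimensional model. This is an illustration under stated assumptions, not how the F-14’s radar actually works: the own jet flies along +x at 500 knots, each return has a known position and velocity, and the hypothetical `NOTCH_TOLERANCE_KT` sets how close to the stationary-object closing rate a return must be before it is discarded.

```python
import math
from dataclasses import dataclass

OWN_VELOCITY = (500.0, 0.0)  # knots; own jet flying along +x (assumption)
NOTCH_TOLERANCE_KT = 5.0     # hypothetical filter width


@dataclass
class RadarReturn:
    name: str
    position: tuple[float, float]  # nautical miles, relative to own jet
    velocity: tuple[float, float]  # knots, the object's own velocity


def line_of_sight(ret):
    """Unit vector from the own jet toward the return."""
    px, py = ret.position
    dist = math.hypot(px, py)
    return px / dist, py / dist


def closing_rate(ret, own=OWN_VELOCITY):
    """Rate at which range to the return shrinks (positive = closing)."""
    ux, uy = line_of_sight(ret)
    return (own[0] - ret.velocity[0]) * ux + (own[1] - ret.velocity[1]) * uy


def ground_closing_rate(ret, own=OWN_VELOCITY):
    """Closing rate a stationary object at the same bearing would show."""
    ux, uy = line_of_sight(ret)
    return own[0] * ux + own[1] * uy


def notch_filter(returns, tol=NOTCH_TOLERANCE_KT):
    """Drop returns whose closing rate matches that of stationary clutter."""
    return [r for r in returns if abs(closing_rate(r) - ground_closing_rate(r)) > tol]


returns = [
    RadarReturn("mountain", (80.0, 0.0), (0.0, 0.0)),
    RadarReturn("head-on fighter", (60.0, 0.0), (-400.0, 0.0)),
    # Same fighter after turning 90 degrees: velocity perpendicular to line of sight.
    RadarReturn("beaming fighter", (60.0, 0.0), (0.0, 400.0)),
]
kept = notch_filter(returns)
```

Only the head-on fighter survives: after its turn, the fighter shows the same 500-knot closing rate as the mountain, so the filter that removes clutter removes it too.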

There must be literally hundreds of different filters, and hundreds of different tricks to counter each filter. A moment’s thought on this topic suggests that the backseat RIO, Anthony Edwards, might be even busier than the hotshot pilot, Tom Cruise. Long before the F-14 comes into visual range à la the movies, the radar operator in the backseat has been playing a very intense game of cat and mouse, tracking opponents and shielding the crew from being tracked. Intuitively, why else would the F-14 have been designed, at such great expense, to carry a second crewmember?

The F-14 was a 1970s-era fighter jet. Today’s technology allows us to embed sensors and radars that are orders of magnitude more powerful and complex. But simply adding more sensors (i.e., an Internet of Things) to a modern fighter jet will overwhelm the crewmembers with too much data. There would be 20 more video screens and a hundred more audio alerts to monitor. The real challenge in a modern fighter jet is not having too few sensors, a shortfall the Internet of Things would fill. Rather, it is being understaffed and overworked.

Sensor Fusion is not simply fusing sensors into one cluttered video monitor. If there are 20 different Internet-linked sensors, then there are 20 different input data streams. They produce a Tower of Babel regardless of whether they are on 20 different screens or overlaid on a single screen. Sensor Fusion is clustering and consolidating. If there are 20 different voting sensors, Level 1 Sensor Fusion may cluster by reporting only the spatially correlated votes and discarding the outliers. Level 2 Sensor Fusion may attempt to integrate the sensors over time and weigh more heavily those sensors that, a posteriori, provided higher-quality tracks. Higher levels may apply ever more advanced clustering approaches. In all cases, Sensor Fusion is about trimming and filtering the sensor streams to fit the number of decision makers. In essence, its goal is smarter sensors, not more sensors.
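The two fusion levels described above can be sketched in a few functions. This is a minimal illustration using the essay’s own Level 1 / Level 2 terms, not a standard algorithm from the fusion literature: Level 1 keeps the largest group of votes lying within a hypothetical `radius` of one candidate centre and averages them, and Level 2 keeps a per-sensor weight that shrinks multiplicatively with each sensor’s error against the fused position, so sensors with a good track record count for more on later scans. The clustering rule, the weighting rule, and the sensor data are all invented for illustration.

```python
import math

Point = tuple[float, float]


def level1_fuse(votes: list[Point], radius: float) -> tuple[Point, list[int]]:
    """Average the largest spatially correlated cluster of votes; discard outliers."""
    best: list[int] = []
    for cx, cy in votes:
        # Indices of all votes within `radius` of this candidate centre.
        members = [i for i, (x, y) in enumerate(votes) if math.hypot(x - cx, y - cy) <= radius]
        if len(members) > len(best):
            best = members
    fx = sum(votes[i][0] for i in best) / len(best)
    fy = sum(votes[i][1] for i in best) / len(best)
    return (fx, fy), best


def update_weights(weights: list[float], votes: list[Point], fused: Point) -> list[float]:
    """Shrink each sensor's weight by its error against the fused track, then renormalize."""
    raw = [w / (1.0 + math.hypot(x - fused[0], y - fused[1])) for w, (x, y) in zip(weights, votes)]
    total = sum(raw)
    return [r / total for r in raw]


def level2_fuse(votes: list[Point], weights: list[float]) -> Point:
    """Weighted average of all votes, using trust earned on earlier scans."""
    total = sum(weights)
    return (sum(w * x for w, (x, _) in zip(weights, votes)) / total,
            sum(w * y for w, (_, y) in zip(weights, votes)) / total)


# Two scans from five sensors; sensor 4 is faulty and reports far from the others.
scans = [
    [(10.0, 10.0), (10.5, 9.5), (9.5, 10.5), (10.2, 9.8), (30.0, -5.0)],
    [(11.0, 11.0), (11.4, 10.6), (10.6, 11.3), (11.1, 10.9), (-20.0, 40.0)],
]
weights = [0.2] * 5  # equal trust before any history exists
for votes in scans:
    history_fused = level2_fuse(votes, weights)      # Level 2: weights from earlier scans only
    fused, inliers = level1_fuse(votes, radius=2.0)  # Level 1: this scan alone
    weights = update_weights(weights, votes, fused)
```

By the second scan the faulty sensor’s weight has collapsed, and the history-weighted estimate stays close to the Level 1 cluster instead of being dragged toward the outlier, as an unweighted mean of all five votes would be.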

Under the Input -> Processing -> Output flow, human staff still do the Processing today, just as before. The perpetual under-staffing means the Processing is the bottleneck. Adding more sensors to everything promises growth in theory, but in practice it only adds to an existing glut of Inputs.
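The bottleneck argument can be sketched as a two-stage pipeline whose output is capped by the slower stage. All rates below are hypothetical round numbers chosen for illustration; the point is the shape of the result, not the specific values.

```python
def run_shift(inputs_per_hour, staff, items_per_person_hour, hours):
    """Return (items processed, items left in backlog) for one shift."""
    capacity = staff * items_per_person_hour  # Processing stage: human throughput
    processed = min(inputs_per_hour, capacity) * hours
    backlog = max(0, inputs_per_hour - capacity) * hours
    return processed, backlog


# Hypothetical rates: sensors emit items per hour; each analyst handles 10 per hour.
baseline = run_shift(inputs_per_hour=100, staff=4, items_per_person_hour=10, hours=8)
more_sensors = run_shift(inputs_per_hour=200, staff=4, items_per_person_hour=10, hours=8)
more_staff = run_shift(inputs_per_hour=100, staff=8, items_per_person_hour=10, hours=8)
```

Doubling the Inputs leaves output unchanged and only grows the backlog, while doubling the Processing staff doubles output.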